import json

import together
from pydantic import BaseModel, Field

client = together.Together()

# Define the schema for the output
class VoiceNote(BaseModel):
    title: str = Field(description="A title for the voice note")
    summary: str = Field(description="A short one sentence summary of the voice note.")
    actionItems: list[str] = Field(
        description="A list of action items from the voice note"
    )

def main():

transcript = (

"Good morning! It's 7:00 AM, and I'm just waking up. Today is going to be a busy day, "

"so let's get started. First, I need to make a quick breakfast. I think I'll have some "

"scrambled eggs and toast with a cup of coffee. While I'm cooking, I'll also check my "

"emails to see if there's anything urgent."

)

# Call the LLM with the JSON schema

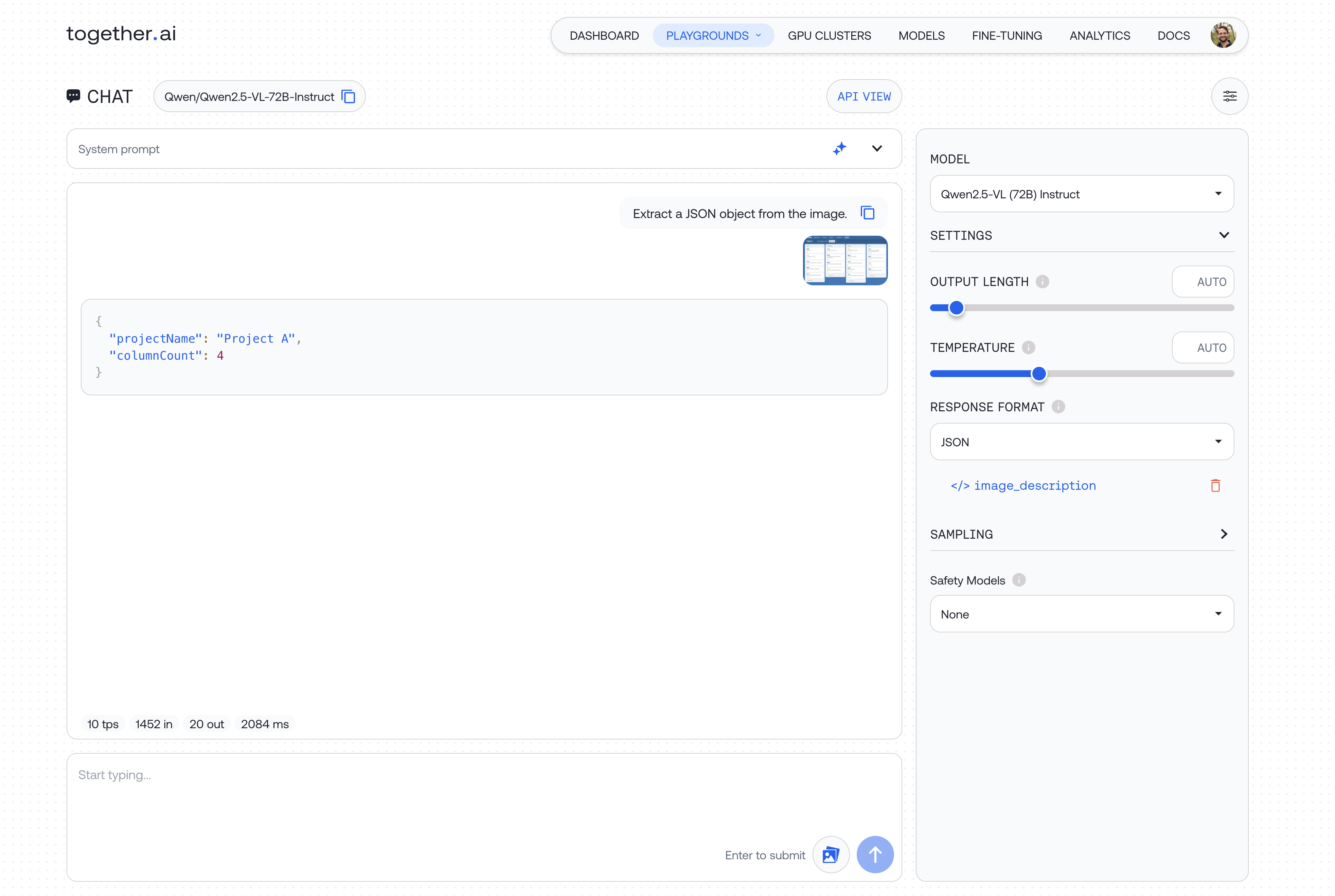

extract = client.chat.completions.create(

messages=[

{

"role": "system",

"content": "The following is a voice message transcript. Only answer in JSON.",

},

{

"role": "user",

"content": transcript,

},

],

model="meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo",

response_format={

"type": "json_object",

"schema": VoiceNote.model_json_schema(),

},

)

output = json.loads(extract.choices[0].message.content)

print(json.dumps(output, indent=2))

return output

main()
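

# A possible follow-up check, sketched under assumptions: in case the model's
# reply does not match the schema, it can help to verify the parsed output
# before using it. `check_voice_note` is a hypothetical helper, not part of
# the Together or pydantic APIs. With pydantic v2 available,
# `VoiceNote.model_validate(output)` would be the more direct route.
def check_voice_note(data):
    """Return a list of problems found in a parsed voice-note object."""
    if not isinstance(data, dict):
        return ["output must be a JSON object"]
    problems = []
    # title and summary are plain strings in the schema
    for key in ("title", "summary"):
        if not isinstance(data.get(key), str):
            problems.append(f"{key} must be a string")
    # actionItems is a list of strings in the schema
    items = data.get("actionItems")
    if not isinstance(items, list) or not all(isinstance(i, str) for i in items):
        problems.append("actionItems must be a list of strings")
    return problems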